Automatic Generation of Artificial Space Weather Forecast Product Based on Sequence-to-sequence Model

doi: 10.11728/cjss2024.01.2023-0029 cstr: 32142.14.cjss2024.01.2023-0029


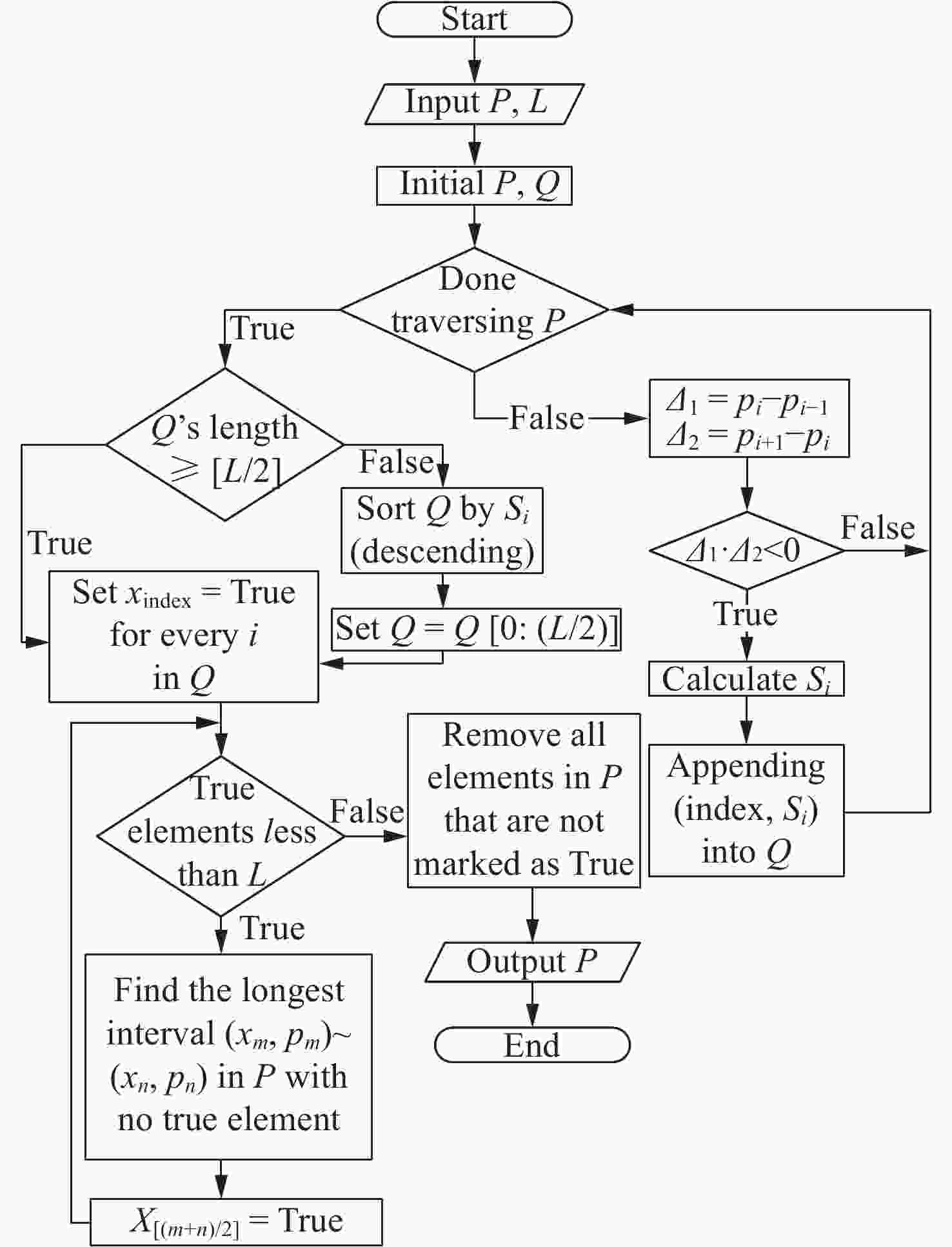

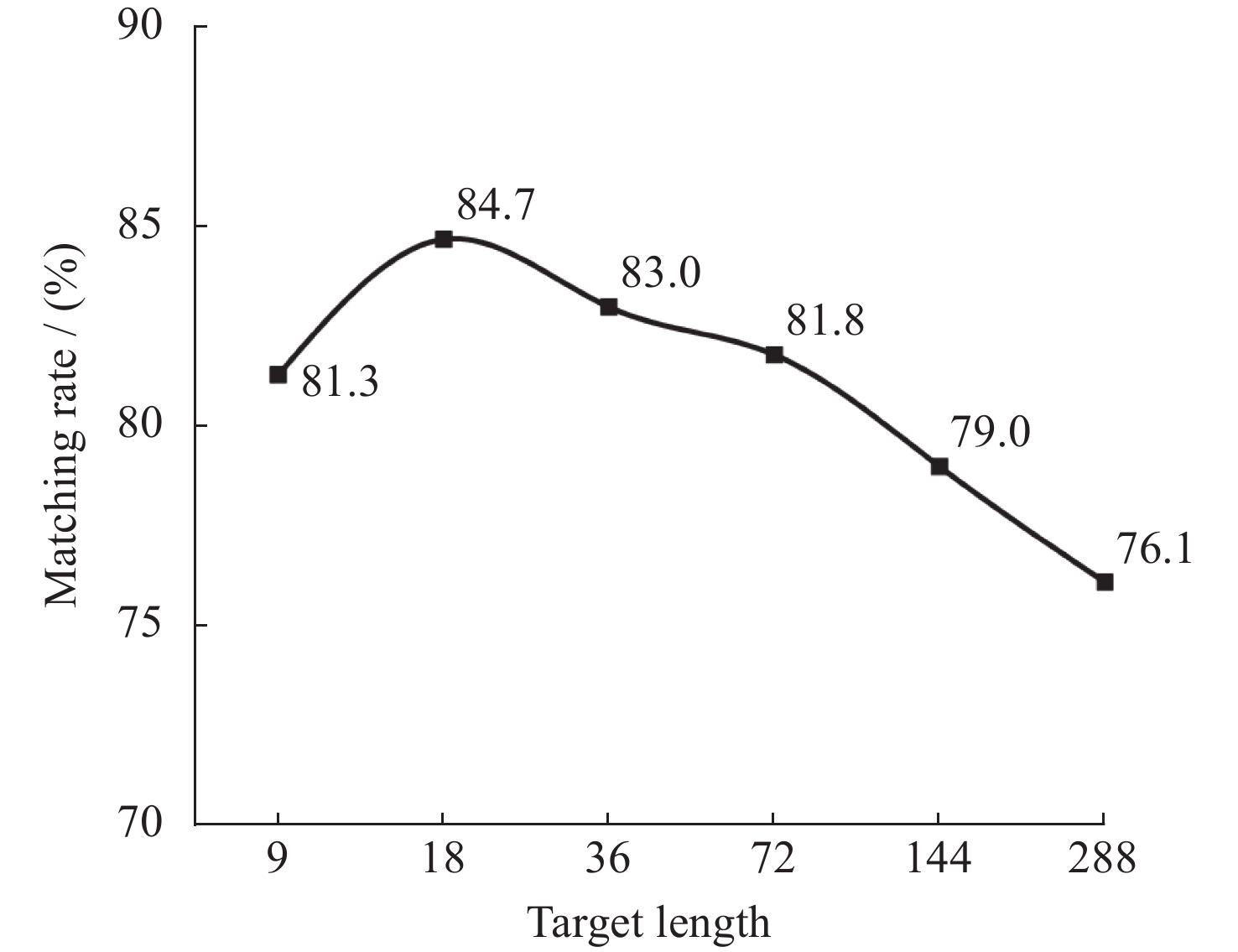

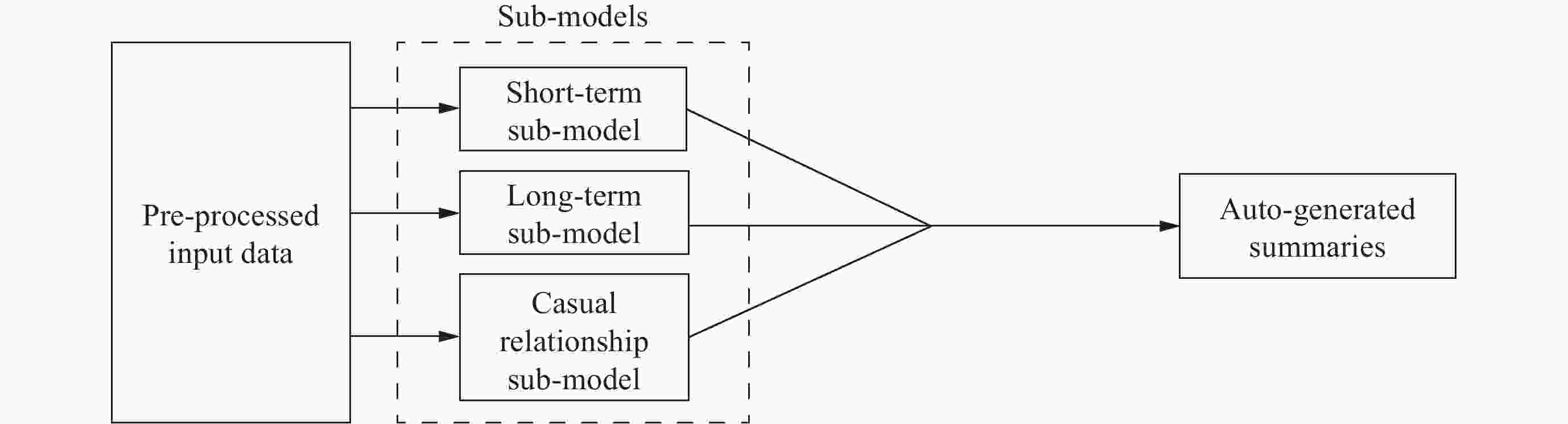

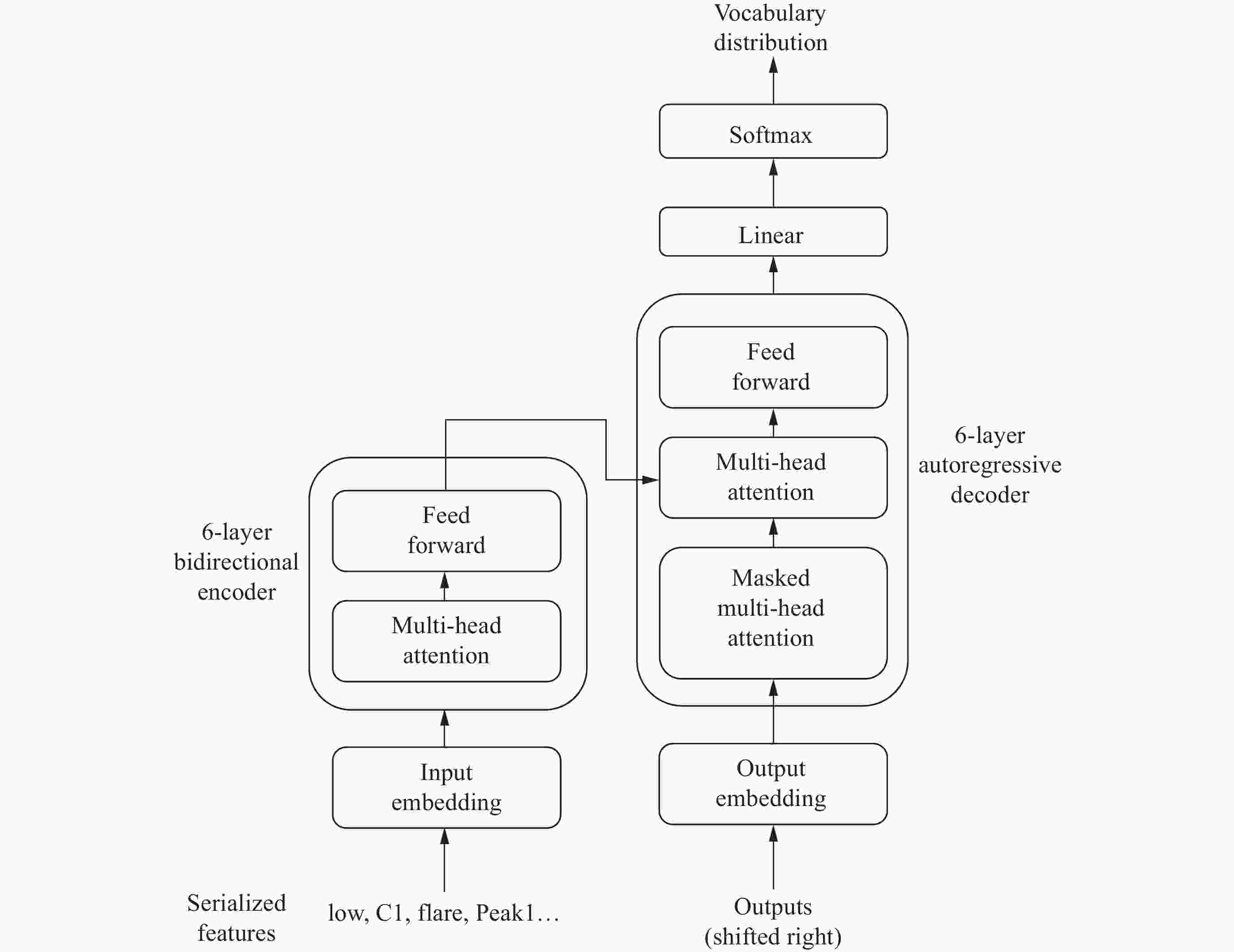

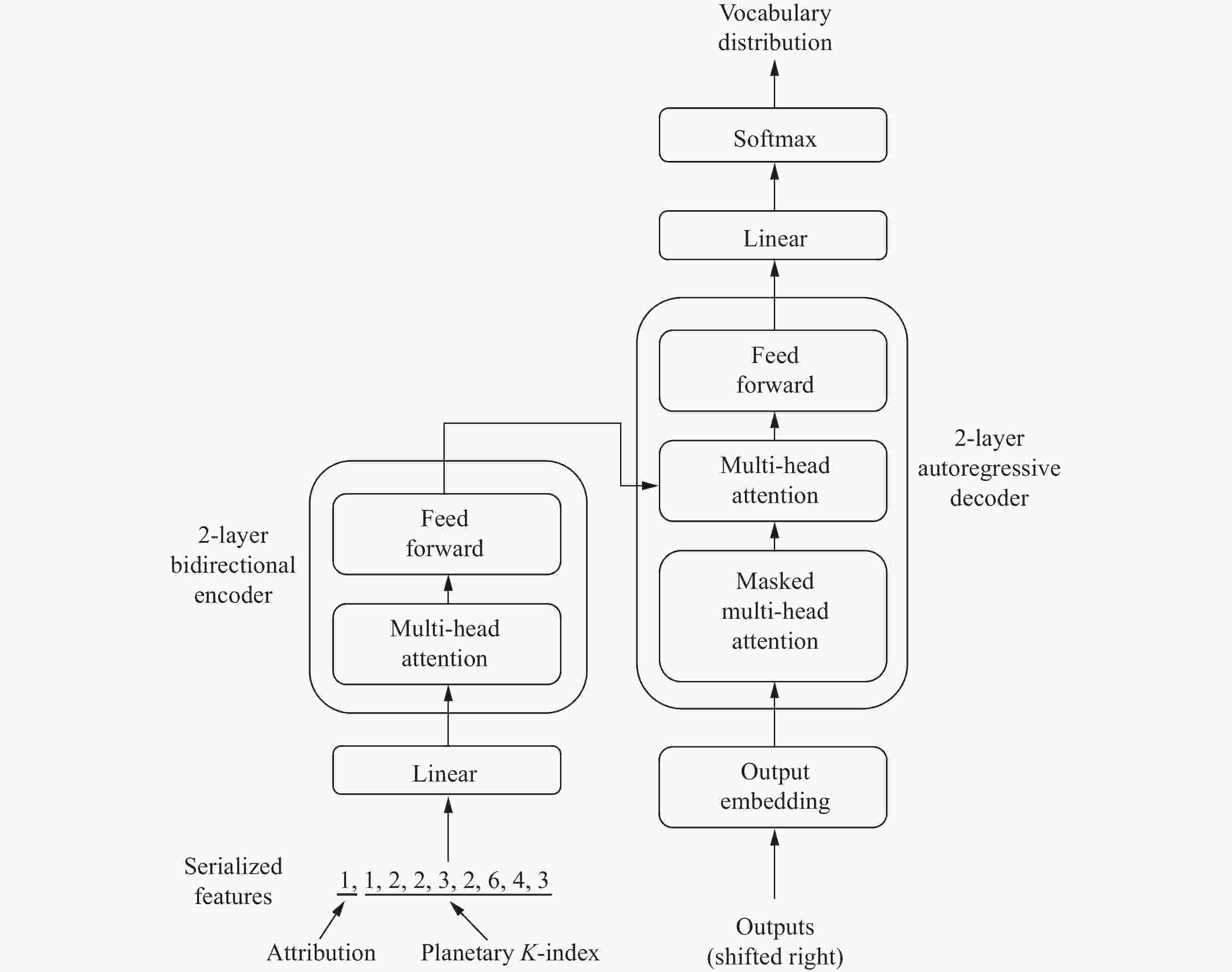

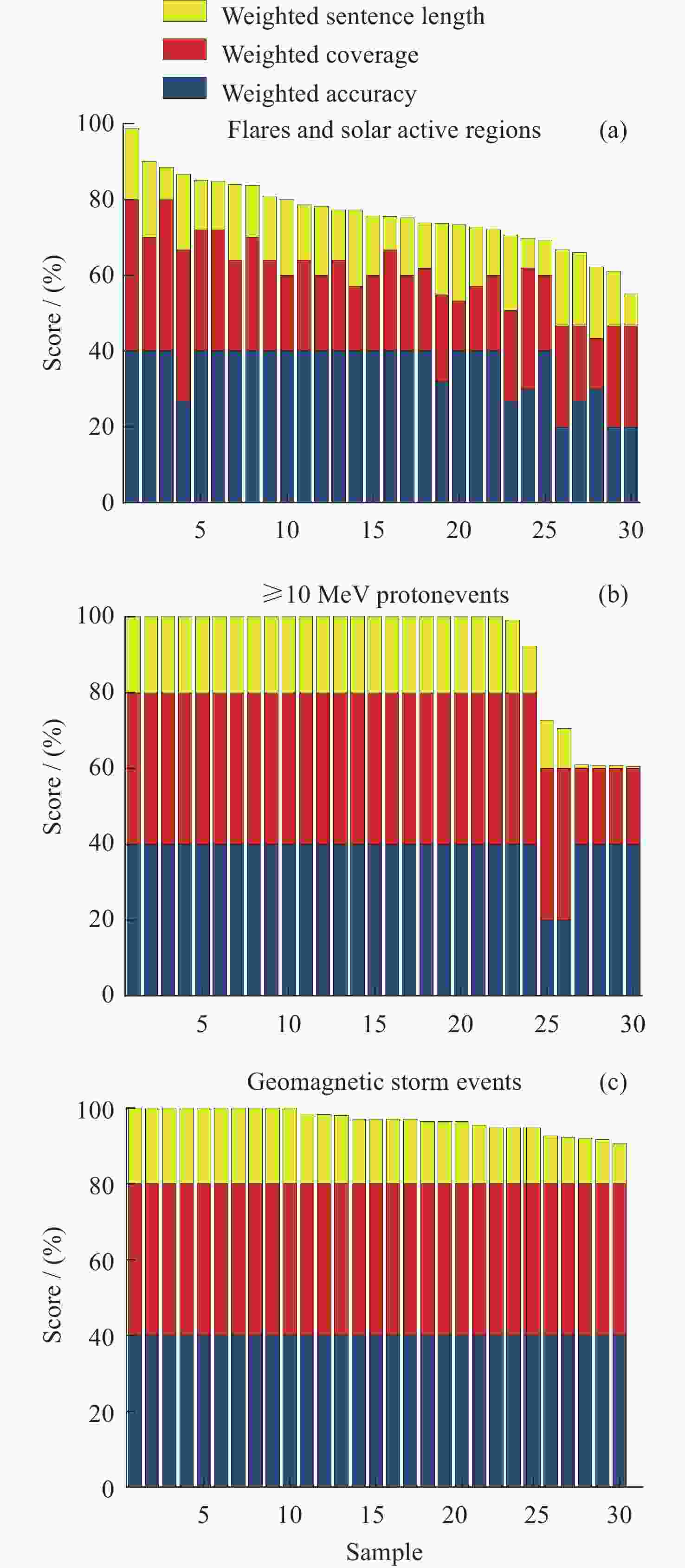

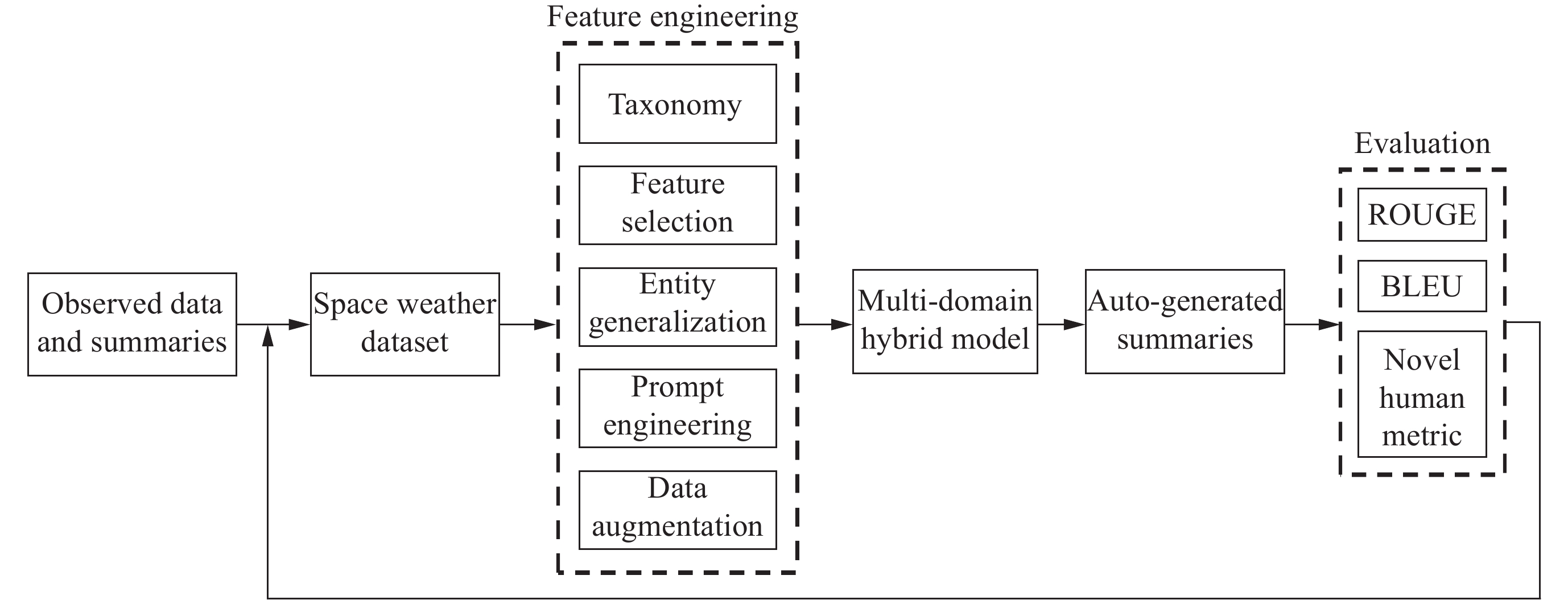

Abstract: Generating artificial space weather forecast products poses two significant challenges: analyzing large volumes of observed space weather data and reducing the bias introduced by personal experience. Natural language generation methods based on the sequence-to-sequence model allow space weather forecast texts to be generated automatically. To carry out the generation tasks at a fine-grained level, we present a taxonomy of space weather phenomena based on their descriptions. We then propose the MDH (Multi-Domain Hybrid) model, which generates space weather summaries in two stages. The model is composed of three sequence-to-sequence deep neural network sub-models: one pre-trained Bidirectional and Auto-Regressive Transformers (BART) model and two Transformer models. To evaluate MDH, we present quality evaluation metrics that combine two prevalent automatic metrics with our own human evaluation metric. The comprehensive scores of the three summary generation tasks on the testing datasets are 70.87, 93.50, and 92.69, respectively. The results suggest that MDH can generate space weather summaries with high accuracy and coherence and of suitable length, and can therefore assist forecasters in producing high-quality space weather forecast products even when training data are scarce.


Key words:

- Space weather

- Deep learning

- Data-to-text

- Natural language generation
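The automatic metrics reported in Table 7 include ROUGE-1 and ROUGE-L, which score a generated summary by its word overlap with a reference summary. The block below is a minimal sketch of both, assuming lowercase whitespace tokenization and a plain F1 score; the function names are illustrative, and the paper's actual evaluation toolkit and settings are not given here.

```python
from collections import Counter


def tokenize(text):
    # Assumption: lowercase whitespace tokenization, not the paper's tokenizer.
    return text.lower().split()


def f1(overlap, cand_len, ref_len):
    # Harmonic mean of precision (overlap / candidate length)
    # and recall (overlap / reference length).
    if overlap == 0 or cand_len == 0 or ref_len == 0:
        return 0.0
    precision = overlap / cand_len
    recall = overlap / ref_len
    return 2 * precision * recall / (precision + recall)


def rouge_1(candidate, reference):
    # Clipped unigram overlap between candidate and reference.
    cand, ref = tokenize(candidate), tokenize(reference)
    overlap = sum((Counter(cand) & Counter(ref)).values())
    return f1(overlap, len(cand), len(ref))


def lcs_length(a, b):
    # Length of the longest common subsequence, row-by-row dynamic programming.
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, 1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate, reference):
    # ROUGE-L based on the longest common subsequence of tokens.
    cand, ref = tokenize(candidate), tokenize(reference)
    return f1(lcs_length(cand, ref), len(cand), len(ref))
```

ROUGE-1 ignores word order, whereas ROUGE-L rewards tokens that appear in the same order as in the reference, so a summary with the right words in the wrong order scores high on the former and low on the latter.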

-

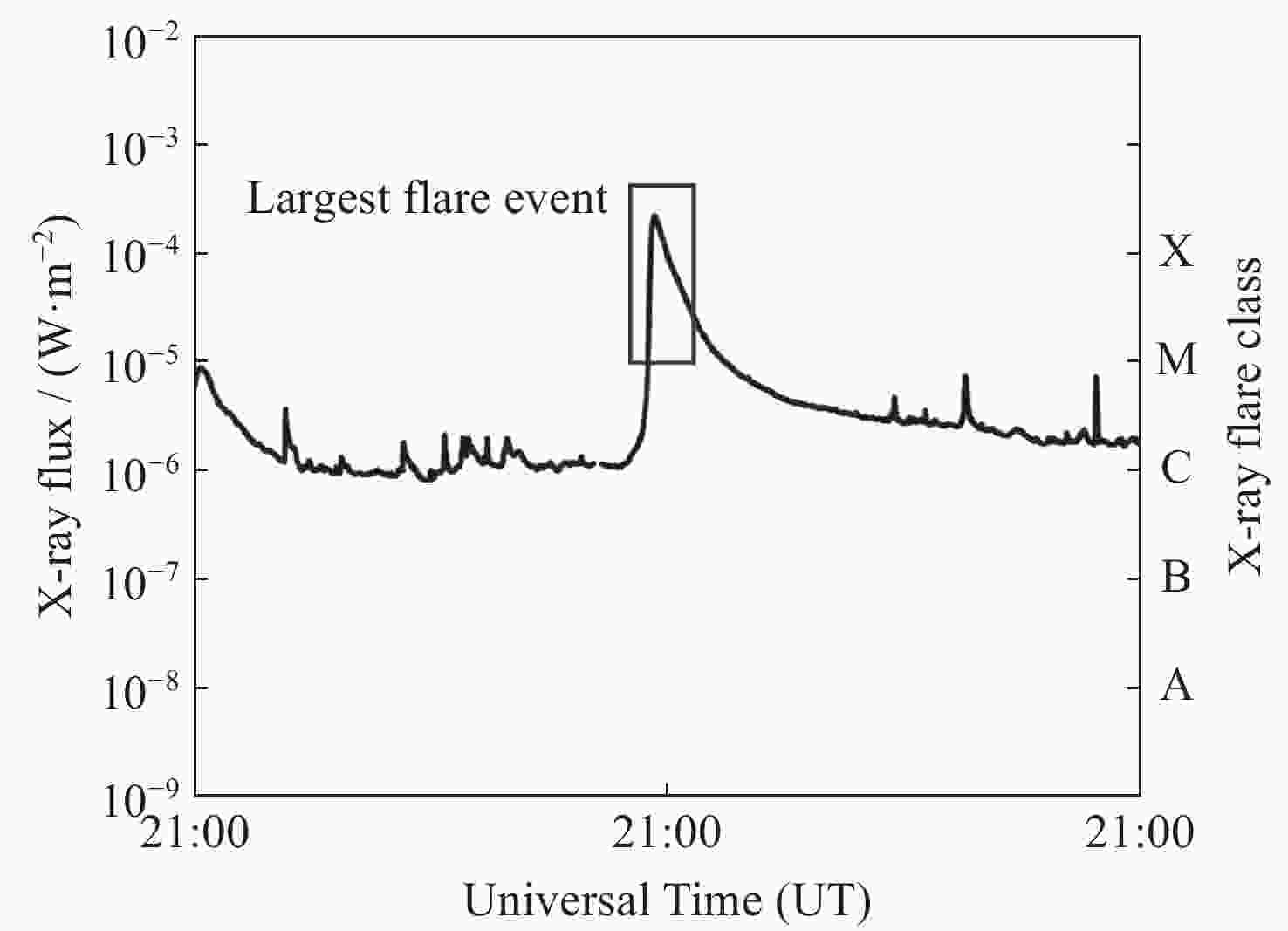

Table 1. Taxonomy of space weather phenomena, separating space weather phenomena into three domains

Domain Typical phenomena Short-term Flares and solar active regions, sudden impulses, solar wind,

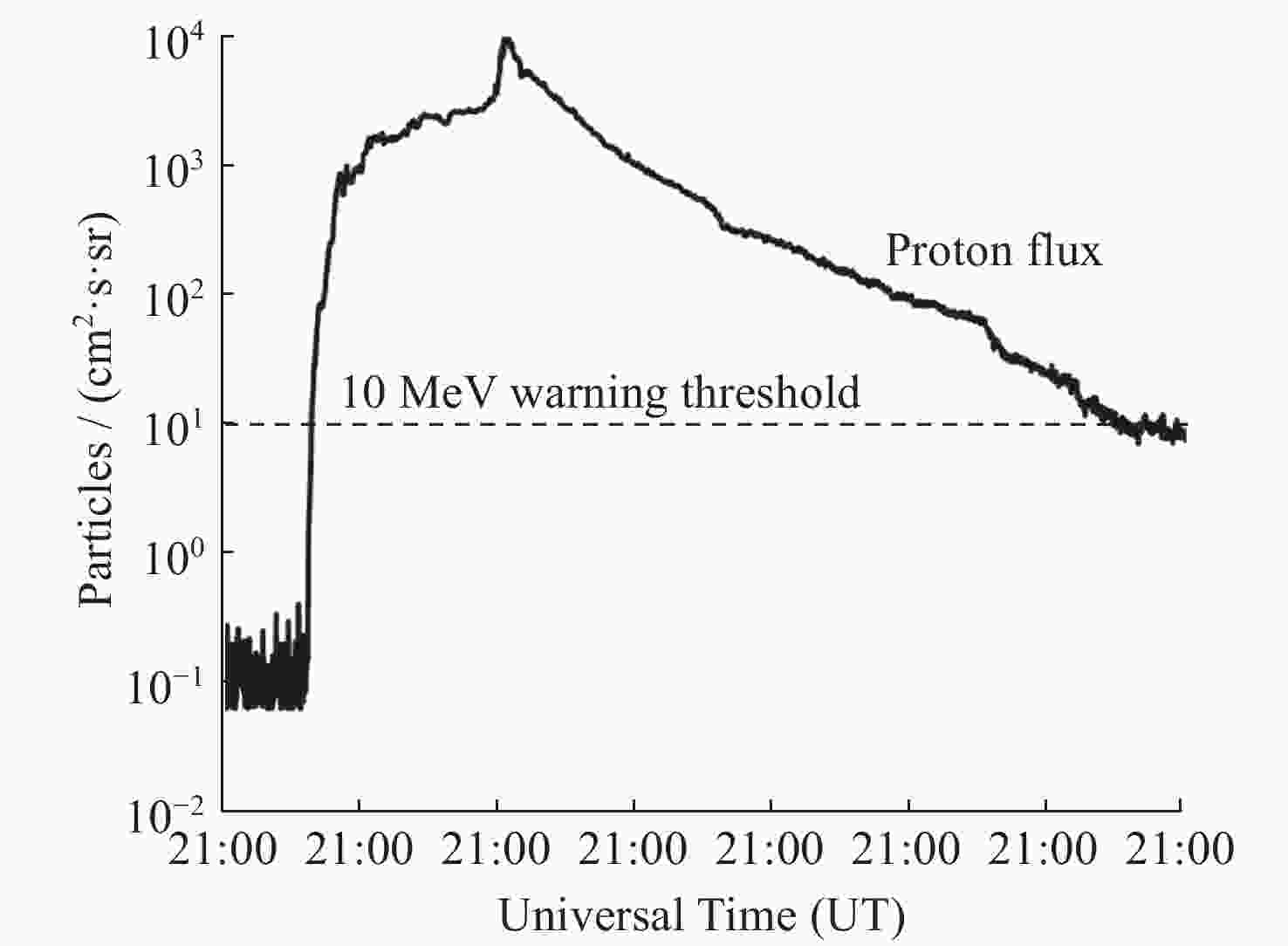

≥2 MeV electron fluxLong-term ≥10 MeV proton events, forbush decrease events Causal relationship Geomagnetic storms caused by high-speed coronal hole stream Table 2. Features of flare events and solar active regions

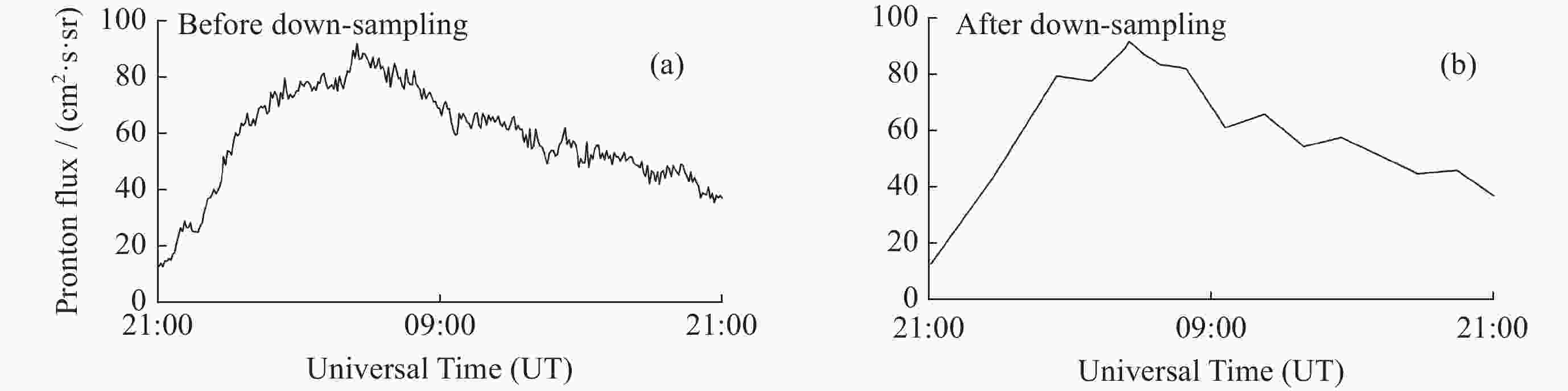

| Input features | Details |
| --- | --- |
| Solar active regions | Solar active regions in the past 24 h, containing area, evolution, serial number, coordinates |
| Flares | Flare records in the past 24 h, containing grade, duration, peak time, serial number, and coordinate of the source region |

Table 3. Features of greater than 10 MeV proton events

| Input features | Details |
| --- | --- |
| ≥10 MeV proton flux | GOES proton flux 5-minute data in the past 24 h |
| Proton event record | Last proton event record, containing beginning time, peak flux, peak time, end time (if ended) |

Table 4. Features of geomagnetic storm events

| Input features | Details |
| --- | --- |
| Planetary K-index | Estimated planetary K-index 3-hour data in the past 24 h |
| Attribution | An integer value: 1 means a high-speed coronal hole stream is the source of the event; 0 means the event is unrelated to any high-speed coronal hole stream |

Table 5. Descriptors of proton event trend patterns

| Pattern | Trend descriptors |
| --- | --- |
| Increase | increase, rise, exceed |
| Decrease | decrease, decline, decay, drop |

Table 6. Decision rules of solar activity level in the past 24 h

| Flare | Activity level |
| --- | --- |
| No flare is higher than B grade | Very low |
| Highest flare is at C grade | Low |
| Number of flares at M1.0–M4.9 is between 1 and 4, and there is no flare higher than M4.9 | Moderate |
| Number of flares at M1.0–M4.9 is over 4, or there is a flare over M4.9 | High |

Table 7. Results of automatic evaluation

| Task | ROUGE-1 | ROUGE-L | BLEU |
| --- | --- | --- | --- |
| Flares and solar active regions | 38.69 | 36.60 | 33.01 |
| ≥10 MeV proton events | 96.32 | 96.20 | 96.13 |
| Geomagnetic storm events | 79.63 | 83.73 | 76.93 |

Table 8. Results of the minor solar activities ablation

| Minor solar activities | ROUGE-1 | ROUGE-L | BLEU |
| --- | --- | --- | --- |
| With | 38.69 | 36.60 | 33.01 |
| Without | 64.11 | 61.29 | 61.61 |

Table 9. Results of human evaluation

| Task | Accuracy | Coverage | Sentence length | Score |
| --- | --- | --- | --- | --- |
| Flares and solar active regions | 89.34 | 77.95 | 77.75 | 82.47 |
| ≥10 MeV proton events | 96.67 | 93.33 | 82.97 | 92.59 |
| Geomagnetic storm events | 100.00 | 100.00 | 84.46 | 96.89 |

Table 10. Confusion matrix of ≥10 MeV proton events summaries

| | Observed positive | Observed negative |
| --- | --- | --- |
| Described positive | 8 | 0 |
| Described negative | 0 | 22 |

Table 11. Comprehensive scores

| Task | Comprehensive score |
| --- | --- |
| Flares and solar active regions | 70.87 |
| ≥10 MeV proton events | 93.50 |
| Geomagnetic storm events | 92.69 |
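The scoring formulas behind Tables 9 and 11 are not stated on this page. As a worked check of the arithmetic, the sketch below uses weights inferred from the tabulated values, which is an assumption and not the authors' stated definition: a human score of 0.4 × accuracy + 0.4 × coverage + 0.2 × sentence length, and a comprehensive score of 0.25 × the mean of the three automatic metrics + 0.75 × the human score. The dictionary keys and function names are illustrative.

```python
# Values copied from Table 7 (ROUGE-1, ROUGE-L, BLEU).
AUTOMATIC = {
    "flares": (38.69, 36.60, 33.01),
    "protons": (96.32, 96.20, 96.13),
    "storms": (79.63, 83.73, 76.93),
}

# Values copied from Table 9 (accuracy, coverage, sentence length).
HUMAN = {
    "flares": (89.34, 77.95, 77.75),
    "protons": (96.67, 93.33, 82.97),
    "storms": (100.00, 100.00, 84.46),
}


def human_score(accuracy, coverage, sentence_length):
    # Assumed weights (0.4, 0.4, 0.2), inferred from Table 9, not from the text.
    return 0.4 * accuracy + 0.4 * coverage + 0.2 * sentence_length


def comprehensive_score(automatic, human):
    # Assumed weights (0.25 automatic, 0.75 human), inferred from Table 11.
    automatic_mean = sum(automatic) / len(automatic)
    return 0.25 * automatic_mean + 0.75 * human_score(*human)


if __name__ == "__main__":
    for task in AUTOMATIC:
        score = comprehensive_score(AUTOMATIC[task], HUMAN[task])
        print(f"{task}: {score:.2f}")
```

Under these assumed weights, the computed values agree with the Score column of Table 9 and with Table 11 to within rounding, but other weightings could fit the same numbers, so the original paper remains the authority on the definition.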

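The decision rules in Table 6 map the flares of the past 24 h to a solar activity level. They can be sketched as a small rule-based function, assuming each flare is given as a class string such as "C3.2" or "M1.5" (a letter A, B, C, M, or X followed by a magnitude); this input format and the function names are assumptions made for illustration.

```python
VALID_CLASSES = "ABCMX"


def parse_flare(flare):
    # Split a class string such as "M1.5" into its letter and magnitude.
    letter = flare[0].upper()
    if letter not in VALID_CLASSES:
        raise ValueError(f"unknown flare class: {flare!r}")
    return letter, float(flare[1:])


def activity_level(flares):
    # Apply the Table 6 rules, checking the highest level first.
    parsed = [parse_flare(f) for f in flares]
    # A single flare above M4.9 (including any X-class flare) means High.
    if any(l == "X" or (l == "M" and m > 4.9) for l, m in parsed):
        return "High"
    # All remaining M-class flares lie within M1.0 to M4.9.
    m_count = sum(1 for l, _ in parsed if l == "M")
    if m_count > 4:
        return "High"
    if m_count >= 1:
        return "Moderate"
    if any(l == "C" for l, _ in parsed):
        return "Low"
    # No flare above B grade, or no flare at all.
    return "Very low"
```

For example, `activity_level(["C3.2", "M1.2"])` falls under the Moderate rule because there is one flare in the M1.0 to M4.9 range and none above M4.9.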
